By Anna Poletti and Simon Cooper

© all rights reserved.

Excellence in Research for Australia (ERA) honours the Australian government’s promise to create a better research quality assurance system. ERA will be streamlined, transparent, internationally verifiable, cost-effective, and based on quality measures appropriate to each discipline. It will compare Australian researchers not just with each other, but with the best in the world. … The government wants to craft a system that has the support of researchers and universities. … The more feedback we get, the better ERA will be.

Senator the Hon. Kim Carr, Minister for Innovation, Industry, Science and Research. June 2008.

This article examines the results of a Freedom of Information (FOI) request into the journal ranking process that took place as part of the Excellence in Research for Australia (ERA) project between 2008 and 2010. We discuss the background to the request and the information that was received. We analyse the data, as well as the gaps in the data, and relate this to the rankings process for 13 journals in the Humanities and Creative Arts (HCA) cluster. We note some anomalies in the rankings of specific journals and relate these to the information about the ranking process. We conclude by commenting on the degree to which the FOI request sheds light on what was essentially a secretive process, and whether academics ought to have confidence in exercises that attempt to fix quality on such a large scale. We suggest that the FOI data demonstrates that attempts to produce and fix a quantitative measure of quality in Humanities publishing cannot adequately account for the diversity of opinion or approaches that constitute the field.1

1. The Freedom of Information Request

Why we used FOI

We began the process of seeking information about the journal rankings process through Freedom of Information shortly after the release of the finalised ERA rankings list in 2010. We decided to pursue an FOI request after it was made clear that the ARC would not discuss the rankings of individual journals, a reasonable position given the size of the list (20,712 journals in 2010). There was, however, considerable consternation in the life writing studies community, in Australia and overseas, regarding the fluctuation in rankings of the longstanding and well-respected journal Biography: An Interdisciplinary Quarterly, which had been ranked as an A* journal in the publicly released second draft of the list and was then given a ranking of C in the finalised list.

Most of the members of the scholarly community interested in Biography assumed this final ranking was a mistake, the result of a data entry or typographical error. However, once it became clear that no discussion would be entered into, we had to try to come to terms with how this mistake would affect life writing scholars in Australia, from senior researchers to early career researchers. In the interest of transparency, we acknowledge that the Freedom of Information request was partly motivated by self-interest with regard to the question of Biography’s ranking. One of the authors of this article had been published twice in the journal at the time the final rankings were released. However, we knew that Biography was not the only field-defining and long-running international journal that had inexplicably been given a low rank. Screen, the film studies journal credited by many with being foundational to the formation of cinema studies as an academic discipline, was ranked B. To anyone with even a passing interest in the discipline, this does not reflect the status of the journal in the field. We believed that the policy of not discussing individual finalised rankings was understandable given the volume of journals the ARC had ranked. Given the importance of the rankings, however, both in influencing future funding decisions and in their implementation in a range of bureaucratic regimes in the University sector, we felt that such a policy needed to be challenged. Interestingly, it was this second ‘unintended use’ of the rankings (in areas such as performance management and decisions regarding academic appointments) that was cited by Senator Carr as a key reason for abandoning the ranking process (Rowbotham).

The documents we asked for

The first version of the request we submitted to the ARC asked for all documents relating to the rankings process for the 2010 Ranked Journal List for Humanities and Creative Arts Cluster. The ARC’s response was that such a request related to the following type and volume of documents:

- Over 65,000 pieces of feedback from over 130 representative groups in the 2008 public consultation process

- Over 40,000 pieces of feedback from the 2009 consultation process, from over 700 reviewers and over 60 peak bodies

- Details of over 40,000 journal entries in the ARC’s database

- 6 cluster finalisation workshops with an average of 12 participants, commenting on approximately 2,000 journals in each workshop.

Here then was the first piece of evidence, on ARC letterhead, of the size and complexity of the undertaking the ARC had set itself. The sheer volume of documents was impracticable for an FOI request or, we suspected, for a Government department to process with accuracy. This response to our first request did, however, help us understand the types of documents available, and so we decided to narrow our request. Given the volume of documents, we decided to request as much documentation as we knew existed regarding the journal rankings process in relation to 13 journals. We nominated in our revised Freedom of Information request six journals (ARIEL, a/b: Auto/Biography Studies, Biography: An Interdisciplinary Quarterly, Life Writing, Mosaic and Prose Studies) that constitute the main journals where literary studies research into life writing is published. We chose these six journals in the hope of getting some sense of how the journal rankings worked across a field of research (in this case, the field of life writing research). We also nominated pairings of journals in other fields: media studies (Media International Australia and Southern Review), feminist theory (Australian Feminist Studies and Outskirts), and cinema studies (Screen and Screening the Past). Finally we requested the documentation relating to Overland, a broad-based literary magazine that also deals with wider themes in the humanities. (Similar publications such as Griffith REVIEW, Meanjin and HEAT had been taken out in the drafting process.) We were interested in how this publication’s self-description as a ‘magazine’, albeit one that contained comprehensive and scholarly material, might be dealt with in the ranking process.

Throughout the process, the ARC’s in-house solicitor helped us to understand the need to refine our request, and without this assistance (which is legislated in the Freedom of Information Act) we would not have got far at all. We also received some advice from a friend with legal training and from an experienced journalist.

Once our revised request was submitted, the ARC advised that the cost of processing our request would be several thousands of dollars. If we were unwilling to pay this charge we could make a submission to the ARC requesting the charge be waived because the information we were seeking was in the public interest. We made such a submission, and the application to have the charge waived was successful.

The information released

The process of negotiating and finalising the Freedom of Information request took a year, with the documents being released to us in early April 2011 (a month and a half before the announcement on 30 May 2011 by Senator Kim Carr that the journal rankings process was being abandoned). We received 231 pages of information, together with a key that identified the documents, indicated whether any information had been excluded, and gave the grounds for each exclusion.

We received the following types of documents on the nominated journals:

1) the entry relating to the journal in the ARC’s journal database: this includes FoR codes (Field of Research codes); ranking given; number of submissions made regarding the journal and the rank recommended in each submission; notes made by ARC staff regarding the journal’s status

2) entries relating to the nominated journals in spreadsheets returned by ARC appointed reviewers of journal rankings

3) emails instructing ARC appointed reviewers on how to conduct the review of the proposed rankings and how to provide feedback (anonymised and redacted)

4) every submission made for each journal during the public consultation process (anonymised)

5) PowerPoint slides introducing the HCA cluster journal ranking process

6) emails between ARC staff and expert reviewers (anonymised, edited and redacted).

The following information and documents were not released:

1) Emails regarding engagement of people to rank journals.

Reason given: ‘Provision of the information in the document would disclose the deliberations of ARC staff in relation to the candidature of people to participate as assessors in ERA ranking. Various public interest factors for and against disclosure of the document were weighed up in order to determine whether or not disclosure would be contrary to the public interest. The ARC contends that release of this material would disclose information in the nature of the deliberations and this disclosure would prejudice the effectiveness of the ERA Ranking process. Disclosure of such material was determined, on balance, not to be in the public interest and is considered exempt under s47C’.

Unlike other types of information defined under the ‘Conditional Exemption’ descriptions of the Federal Freedom of Information Act, the deliberative processes exemption (s47C) does not include any requirement for the agency to prove that harm can result from the release of the information.2

2) Information regarding the steps undertaken by the ARC and its assessors in the ERA ranking process.

Reason given: ‘The documents over which exemption is claimed details the steps undertaken by the ARC and its assessors in the ERA ranking process. This information is part of the ERA assessment of the journal. Release of this information would be contrary to the public interest. It is not in the public interest to release details of the specific steps taken, and the process undertaken in relation to the ranking of a Journal. … The ARC contends that release of this material would prejudice the effectiveness of the ERA Ranking process. Disclosure of such materials was determined, on balance, not to be in the public interest and is considered example under s47E’.

Section 47E of the Federal Freedom of Information Act permits agencies to not release information ‘where disclosure would, or could, reasonably be expected to, prejudice or have a substantial adverse effect on certain listed agency operations’.3 In this case, we infer that the ARC excluded some information on the basis that its release may prejudice the ARC’s ability to undertake the ERA ranking process as instructed by the Minister.

The use of these exemptions raises interesting questions given that, in this instance, a government agency is seeking to instrumentalize the intellectual practices of the research community in order to create methods for evaluating the work done by the community. In academic culture, giving others access to deliberative processes is fundamental to research practice. The research community’s ability to understand and have confidence in the ARC’s process could be limited by the ARC’s protection of its deliberative processes given its status as a government agency. This culture clash is not new, as access to deliberative processes regarding the awarding of research grants has long been protected. However, the extension of the ARC’s scope in defining research quality to include a qualitative measure of all outlets for the reporting of academic research does heighten the potential for tension between the sector and the agency regarding transparency.4

2. What the data might tell us

We recognise that Freedom of Information is not a commonly used research method in the humanities. In interpreting these documents we have drawn on techniques that include content analysis, textual analysis, and semiotics. Because the information released is necessarily partial we have combined these methods with a degree of speculation and inference, drawing on the larger context through which the ERA and other schemes of research accountability have been produced and discussed. We recognise that claims to knowledge based on these documents and their interpretation are limited, but it is our hope that they create the space for more discussion and debate regarding the measurement of research quality in Australian higher education.

Changes in rank across the 3 stages of ranking: notable shifts5

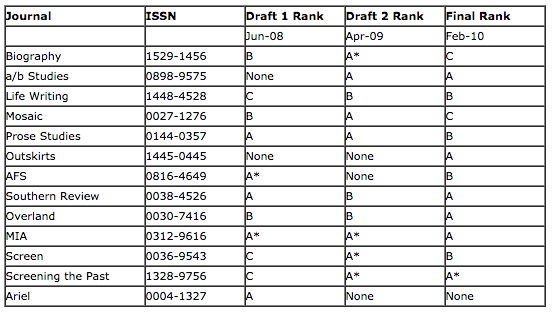

After the release of the first draft of the ranking, the ARC opened up the ranking process to public consultation. A second draft was then released in April 2009, presumably reflecting to some extent the submissions made, and a final list was made public in February 2010. Table 1 shows the changes in rankings through each stage of the process.

While some of the journals remained relatively stable in terms of their rank, there were some remarkable shifts, notably for Biography, Mosaic, Screen, Screening the Past and Outskirts. In the transition between the second and final stages where public submissions were taken, the most dramatic shift occurred with Biography, which dropped from the highest ranking to the lowest. Those in the academic community regard such changes as highly consequential. It quickly became clear that a C ranking could spell the end of a journal, no matter how long-running or influential, as the public demise of the Australian journal People and Place showed (Lane). The rankings had a rapid impact upon academics, who began to make decisions about where to publish according to the journal’s rank. Thus to drop from an A* to a C in the case of Biography was potentially devastating. Such shifts created a sense of unease in the academic community. How could journals move from being regarded as ‘leaders in their field’ to the lowest quality or vice-versa in a single drafting process? We hoped that the FOI process might shed some light on how the rankings were made and altered in each phase.

One factor that could contribute to the significant shift in rankings is the limits put on the number of journals which could achieve the top tier ranks. As Genoni and Haddow explain:

Four ‘tiers’ have been instituted for ERA ranking, with each tier incorporating an approximate percentage of the titles within a discipline. These are A* (top 5%); A (next 15%); B (next 30%); and C (next 50%).

This raises the larger question around any attempt to fix quality on a mass scale, namely the idea of quality as a consequence of the artificial production of scarcity. The determining relationship between scarcity and quality should be interrogated in discussion of ranking processes. In not releasing information regarding its deliberative processes, the ARC leaves the sector to speculate about how the necessity for scarcity is applied in each FoR category.
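To make the arithmetic of the tier limits concrete, the following is a minimal sketch in Python. It assumes that the approximate percentages quoted by Genoni and Haddow are applied as hard caps within a single field, and it uses a hypothetical field of 200 journals. The function name, the field size and the rounding rule are illustrative assumptions only; the released documents do not show how, or how strictly, the ARC applied these proportions in any FoR category.

```python
# Approximate tier shares (in percent) as quoted by Genoni and Haddow.
TIER_PERCENT = {"A*": 5, "A": 15, "B": 30, "C": 50}


def tier_quotas(journal_count: int) -> dict[str, int]:
    """Hypothetical helper: convert the approximate shares into whole-number caps.

    Assumption: the top three tiers are rounded down and tier C absorbs
    whatever remains, so the caps always sum to the size of the field.
    """
    quotas = {}
    allocated = 0
    for tier in ("A*", "A", "B"):
        cap = journal_count * TIER_PERCENT[tier] // 100
        quotas[tier] = cap
        allocated += cap
    quotas["C"] = journal_count - allocated
    return quotas


if __name__ == "__main__":
    # Hypothetical field of 200 journals.
    print(tier_quotas(200))  # {'A*': 10, 'A': 30, 'B': 60, 'C': 100}
```

Under these assumptions a field of 200 journals has room for only ten A* titles, so promoting one journal into the top tier would require demoting another, whatever the reviewers thought of either.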

3. Analysing the Data

We have attempted to order the information received into the following categories: public submissions, ‘details of [ranking] decision’, reviewing process instructions, reviewing process quantifiable comments, reviewing process qualitative comments by reviewers. We have grouped the information into tables and have provided a degree of analysis and discussion where possible. This information is to be read in conjunction with the various stages in ranking and the shifts in individual journal rank.

3.1 Public Submissions

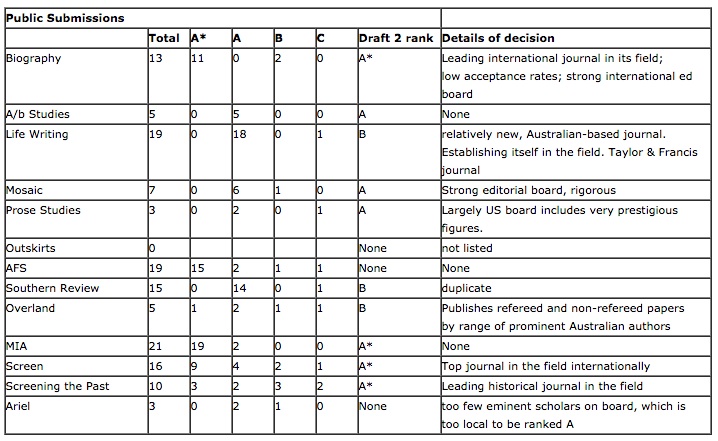

Public submissions for each journal were made between the first and second stages of the ranking process. We try to understand the weighting given to the rank recommendations made in public submissions, and to see whether there is any association between those recommendations and the changes in rank made by the ARC.

Is there any association between the public submissions and the change in ranking between the first and second drafts?

In the case of Biography, Mosaic, Prose Studies, Screen, MIA and a/b: Auto/Biography Studies, the weighting of the submissions reflects the change in rank, the continuity in rank, or the new (first-time) ranking. Some journals, such as Life Writing and Overland, showed a weaker (but nevertheless plausible) connection between the submissions and the rank.

In the case of two journals there was little or no correlation between the submissions and the ranking. Southern Review moved from an A to a B. This is the only overt shift downward in the ranking amongst the journals at this stage. It occurs despite 14 submissions recommending an A ranking and only one submission recommending a C. Screening the Past rose from a C to an A*. Three submissions recommended an A*, two an A, three a B and two a C. This mixture does not reflect the change in ranking.

There were some anomalies worth noting. Australian Feminist Studies moved from an A* ranking to having no ranking at all. The journal received a substantial number of submissions recommending an A* ranking, but we are not given any information in the FOI documents about why the journal received no ranking at the second draft stage. Outskirts was not listed at this stage in the rankings and thus had no submissions. (Interestingly, this journal receives an A in the final draft.)

In the absence of any other information about process or decision-making criteria we are left to speculate on the significance of the public submission process on the second round of rankings. If public submissions did have an impact (and there seems to be a correlative effect in some cases) we do not know if it was the number of submissions, the quality and content of the submission, or the author(s) of the submission that carried force. The only other evidence for decision making in this period comes from an email dated 20th February 2009 from ‘ARC-ERA journals’ to a list of recipients (redacted).6 The email is to ‘Professors’ who are members of the ‘Studies in Creative Arts and Writing Round Table’. The email mentions a list of journals that reflected the ‘suggested changes’ made by the members. We do not know anything about how the changes were made, or what guidelines were used. The email asks members to declare any conflict of interest with the journals on the list and adds ‘I appreciate that we were doing this on the day, but I want to ensure that our records were correct’. This suggests that between stage one and two of the rankings at least some of the changes were made in a group forum and perhaps in the space of a single day.

3.2 ‘Details of Decision’

The information recording the details of the decision based on the public submission process is included (verbatim) in Table 2. In the FOI documents this was included with the information on public submissions in relation to each journal. It suggests the reason the ARC reviewers chose to amend the ranking of the journal or maintain the current ranking. In terms of actual ‘details’ the information released is minimal. In several cases no details are given. In other cases mention is made of the composition of the editorial board (4 mentions) with the ranking of the journal proportional to the international composition of the editorial board or the prestige of the board members. In 3 cases the ranking decision is made around the assertion that the journal is ‘leading’ its field or ‘top in the field’ but we are not given any information as to how such claims are justified. In one case ‘low acceptance rates’ are given as the reason to elevate a ranking but acceptance rates do not factor in the comments relating to any other journals. Nor are we privy to how (or even if) this is measured by the ARC or reviewers. That another journal contains a mixture of refereed and non-refereed articles perhaps indicates why it is ranked at a low level but we do not see such criteria operating elsewhere. The same can be said for the pejorative category of being ‘local’. Anyone looking for a set of consistent measures in such details would be disappointed in the partial quality of the reasons given for ranking journals at this stage.

3.3 Reviewing process instructions

This section examines the evidence of input from the ARC reviewers in the documents we received. As mentioned above, the FOI documents contained information about recording ARC reviewer comments in a spreadsheet. The instructions are all dated after the second draft of the journal rankings was published. Most of the emails giving instructions to reviewers were sent in November 2009. Each reviewer was instructed to complete a spreadsheet. The spreadsheet contained a set of columns containing existing information about the journal in question (title, existing rank, FoR codes). The ARC reviewer was instructed not to make any changes to these columns. There was also a set of blank columns. These columns were the spaces where the ARC reviewer could comment on the rank and status of the journal. The columns contained the following headings:

- Reviewer conflict of interest

- Reviewer agree with current status (ie FoR codes, starting year of publication etc)

- Reviewer Rank (if the reviewer suggested a different rank they could indicate it here)

- Reviewer FoRs (reviewer could add/delete FoRs)

- Reviewer Comments (‘this is a free text free (sic) for you to provide supporting information or other comments eg peer review status, ISSN or title’)

Reviewers were instructed to ‘place any comments in these columns’ and that ‘the ARC will only be uploading these columns, so please ensure that all changes are captures (sic) in these columns’

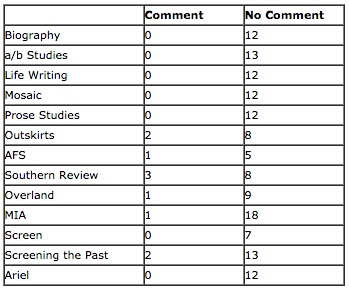

We can assume from the number of these emails to reviewers, the identical instructions for the spreadsheet and the fact that it is emphasized that the ‘ARC will only be uploading these columns’ that the spreadsheet was thought to be significant in the ranking process. The FOI documents contained 25 of these spreadsheets and Table 3 summarizes the input of the ARC reviewers with respect to this spreadsheet.

3.4 Reviewing process: quantifiable comments on specific journals

The first thing that stands out about each reviewer’s relation to the spreadsheet is the significant lack of comment. Seven of the journals (Biography, a/b: Auto/Biography Studies, Life Writing, Mosaic, Prose Studies, Screen and ARIEL) receive no comment, indeed no input at all, from the ARC reviewers. In relation to the other journals there is a stark disproportion between reviewer comment and the number of reviewers who chose not to comment.

In terms of numerical data, and remembering that ARC reviewers were specifically instructed to complete the spreadsheet and that the spreadsheet would be uploaded into the ARC database (giving this a certain evidentiary force), it is significant that there is very little input by the ARC reviewers into the spreadsheet at all. Readers might speculate upon the motivations behind such minimal intervention: were reviewers reluctant to intervene at all, or were they more comfortable in other contexts (roundtables, verbal discussion etc)?

Finally, there is nothing in the FOI documents that would explain the dramatic shifts in ranking from the second to the final stage of the process. Biography moved from an A* to a C (the maximum range downward) and Mosaic moved from an A to a C, yet in both cases there is no documented intervention from the ARC reviewers at all. Something nevertheless caused both journals to be dramatically downgraded. Outskirts is again an anomaly. Its current ranking is given as A, yet elsewhere (in publicly released drafts) it is not ranked at all.

3.5 Specific Comments by Reviewers

The FOI documents reveal around 12-18 potential reviewers for each journal yet for 13 journals there are only six comments in total. Seven of the journals changed rank, yet we received little data to indicate why these shifts took place. The reviewer comments are listed below:

Overland ‘more a mag than a journal’ (recommends shift from A to B)

Southern Review ‘solid and long-lived reputation, strict refereeing, good editors’ (recommends shift from B to A); ‘has become … more defined by Communication and Media studies and by Cultural studies. Politics is more understood as cultural politics. It is the best communications and Media Studies journal after MIA’

Outskirts ‘not many quality articles. Parochial’ (recommends shift from A to B)7

MIA ‘Australia’s leading Media Studies journal. It is analogous to Media Culture and Society and Journal of Communication as int’l comparators. It was recently accepted for Web of Science. It has shaped Australian discussion and sometimes public debate since … 1978. It is easily the most respected journal from among Australian published film, television and digital media journals. Its ranking should reflect this’ (recommends shift from A to A*)

Screening the Past ‘the journal is increasingly focussed on media histories. A good journal’

Most of the reviewers’ comments work as assertions (‘a good journal’ or ‘parochial’) rather than justifying a change of rank through evidence. It is instructive to compare this to the categories that structured the public submissions where contributors were invited to cite more specific indicators of quality. From what evidence we have here, it seemed that ARC reviewers were not required to provide the same justification. What also seems clear is that the decision to alter journal rankings took place outside of what documented evidence we have. Despite the attempts by the ARC to create a template where reviewer decisions could be lodged, along with comments or reasons, the available evidence indicates the ranking process remained opaque and an ‘insider’ secret. A tension is created between the activities of the ARC as a government body, which can protect the efficacy of its programs by not disclosing key information about its decision making processes, and the practice of scholars as reviewers of quality in an environment where they are accountable to their peers. Such outcomes do not create confidence in a process designed to create faith through transparency. Beyond this we learn little about how ‘quality’ is determined by those involved in the ranking process. The dearth of actual comments, along with the lack of specificity revealed in the small number of actual comments we have access to, results in the notion of quality remaining shrouded in conjecture.

4. Summary of Findings

Given the selective nature of the documents we received, we make the following observations:

- The sheer volume of documents involved in the journal ranking process suggests the logistical difficulty of any single government department being able to manage the process effectively.

- There is no evidence that reviewers consistently filled out journal ranking spreadsheets. If reviewers did follow the instructions from the ARC (or followed a different set of instructions) the process remains invisible.

- The relationship between the public submission process and the ARC reviewers remains unclarified.

- Some individual rankings do not appear to be supported by the documents (e.g. Biography).

- One journal is inconsistently represented throughout the process (Outskirts).

- Indications of reasons for ranking decision or specific reviewer comments, to the extent that they exist in the FOI documents, indicate the vagueness of the approach. Assertions of journal quality—‘leader in the field’, ‘top journal’, ‘rigorous’—remain unsupported by any evidence.

While the FOI request has provided some information with respect to the ranking process, any attempt to gain a reliable and comprehensive understanding of how and why journals were ranked across the three stages remains a frustrating experience. For an exercise aimed at producing ‘transparency’ the results are disappointingly opaque. The kinds of anomalies that prompted the initial request, such as the ranking of Biography, remain as mysterious as ever. There remain substantial gaps in the information received and where we do receive specific indications of how quality is measured (reviewer comments etc) they remain vague and ad hoc in nature. Perhaps this is reflective of the peer-review process in general, where most academics have firsthand experience of conflicting views on what constitutes quality work (Cooper and Poletti). We think, however, this raises larger questions regarding the decision to attempt such an exercise in the first place. The FOI data demonstrates that attempts to produce and fix a quantitative measure of quality in Humanities publishing cannot adequately account for the diversity of opinion and approaches that constitutes the field.

The large volume of documents required in an exercise such as this, alongside the large amount of human resources in collating, judging and ranking submissions and evidence, suggests that the task set for the ARC—identifying and ranking every peer reviewed publication in the English-speaking world—can only be a partial and at times necessarily inaccurate attempt to fix the elusive issue of ‘quality’. Unlike the process of peer-review, where reviewer feedback and argument is evident in the decision process, or metrics, where at least errors and flaws in methodology can be exposed, we are still largely unsure as to what determined the ranks of individual journals in the ERA exercise. With the journal rankings now officially abandoned, there is plenty of anecdotal evidence that the lists of ranked journals are still being used as measures of performance by institutions. If this is the case the situation is made even worse by the fact that appeals to change a ranking can no longer occur. Finally, it is evident from this study that any insight as to how the rankings were arrived at remains elusive to academics and members of the public.

Anna Poletti is a lecturer in Literary Studies and co-director of the Centre for The Book, Monash University. She is the author of Intimate Ephemera: Reading Young Lives in Australian Zine Culture (MUP) and co-editor of the forthcoming collection Identity Technologies (U Wisconsin Press).

Simon Cooper teaches in communications at Monash University and is an editor of Arena Journal. His publications include Technoculture & Critical Theory: In the Service of the Machine? (Routledge) and Scholars and Entrepreneurs: The Universities in Crisis (Arena Publications).

Acknowledgements

The authors would like to thank the two anonymous reviewers for their feedback and Alex Burns, Grania Sheehan, Tim O’Farrell and Marni Cordell for their assistance in the research and preparation of this article.

Notes

1 This paper contributes to a larger debate around the ERA and the journal ranking process. The literature on the ERA and journal ranking is rapidly growing and we cannot include all contributions to this debate. For general statements by Minister Carr on the ERA see Carr, ‘In search of research excellence’ and ‘New era in research’. A sample of the more critically engaged responses can be found in Cooper and Poletti; Genoni and Haddow; Redden; Young et al.

2 See 6.59, in ‘Conditional Exemptions’ http://www.oaic.gov.au/publications/guidelines/guidelines-s93a-foi-act_part6_conditional-exemptions.html#_Toc286928230

3 ‘Certain operations of Agencies’ http://www.oaic.gov.au/publications/guidelines/guidelines-s93a-foi-act_part6_conditional-exemptions.html#_Toc286928230

4 This is reflected in the submission by the Australian Academy of Humanities to the ERA Consultation in March 2011: ‘Given what is at stake, there must be transparency not only around journal rankings and other metrics, but also about how the final scores are put together’ (5).

5 Definition of ranks:

Overall criterion: Quality of the papers

A*

Typically an A* journal would be one of the best in its field or subfield in which to publish and would typically cover the entire field/subfield. Virtually all papers they publish will be of a very high quality. These are journals where most of the work is important (it will really shape the field) and where researchers boast about getting accepted. Acceptance rates would typically be low and the editorial board would be dominated by field leaders, including many from top institutions.

A

The majority of papers in a Tier A journal will be of very high quality. Publishing in an A journal would enhance the author’s standing, showing they have real engagement with the global research community and that they have something to say about problems of some significance. Typical signs of an A journal are lowish acceptance rates and an editorial board which includes a reasonable fraction of well known researchers from top institutions.

B

Tier B covers journals with a solid, though not outstanding, reputation. Generally, in a Tier B journal, one would expect only a few papers of very high quality. They are often important outlets for the work of PhD students and early career researchers. Typical examples would be regional journals with high acceptance rates, and editorial boards that have few leading researchers from top international institutions.

C

Tier C includes quality, peer reviewed, journals that do not meet the criteria of the higher tiers.

In this article, when we refer to a journal as ‘not ranked’ in our discussion, the journal does not appear in the journal ranking list.

6 p.33 of the FOI documents

7 There was no public knowledge of Outskirts’ rank at stage 2.

Works Cited

Carr, Kim. ‘In search of research excellence.’ Science Alert 30 March 2008. http://www.sciencealert.com.au/opinions/20082703-17104.html Accessed 30 Oct 2012.

Carr, Kim. ‘New era in research will cut the red tape.’ The Australian, 15 July 2009. http://www.theaustralian.com.au/news/new-era-in-research-will-cut-the-red-tape/story-e6frg6n6-1225750118068 Accessed 30 Oct 2012.

Cooper, Simon, and Anna Poletti. ‘The New ERA of Journal Ranking: The Consequences of Australia’s Fraught Encounter with “Quality”.’ Australian Universities’ Review 53.1 (2011): 57.

Genoni, Paul, and Gaby Haddow. ‘ERA and the Ranking of Australian Humanities Journals.’ Australian Humanities Review 46 (May 2009). https://australianhumanitiesreview.org/archive/Issue-May-2009/genoni&haddow.htm Accessed 30 Oct 2012.

Lane, Bernard. ‘Global Focus Stills Vital Local Voice.’ The Australian, 2 March 2011. http://www.theaustralian.com.au/higher-education/global-focus-stills-vital-local-voice/story-e6frgcjx-1226014345226 Accessed 30 Oct 2012.

Redden, Guy. ‘From RAE to ERA: Research Evaluation at Work in the Corporate University.’ Australian Humanities Review 45 (Nov 2008). https://australianhumanitiesreview.org/archive/Issue-November-2008/redden.html Accessed 30 Oct 2012.

Rowbotham, Jill. ‘End of an ERA: Journal Rankings Dropped.’ The Australian Higher Education Supplement, 30 May 2011. http://www.theaustralian.com.au/higher-education/end-of-an-era-journal-rankings-dropped/story-e6frgcjx-1226065864847 Accessed 30 Oct 2012.

Young, S., D. Peetz and M. Marais. ‘The Impact of Journal Ranking Fetishism on Australian Policy-Related Research: A Case Study.’ Australian Universities’ Review 53.2 (2011): 77-87.